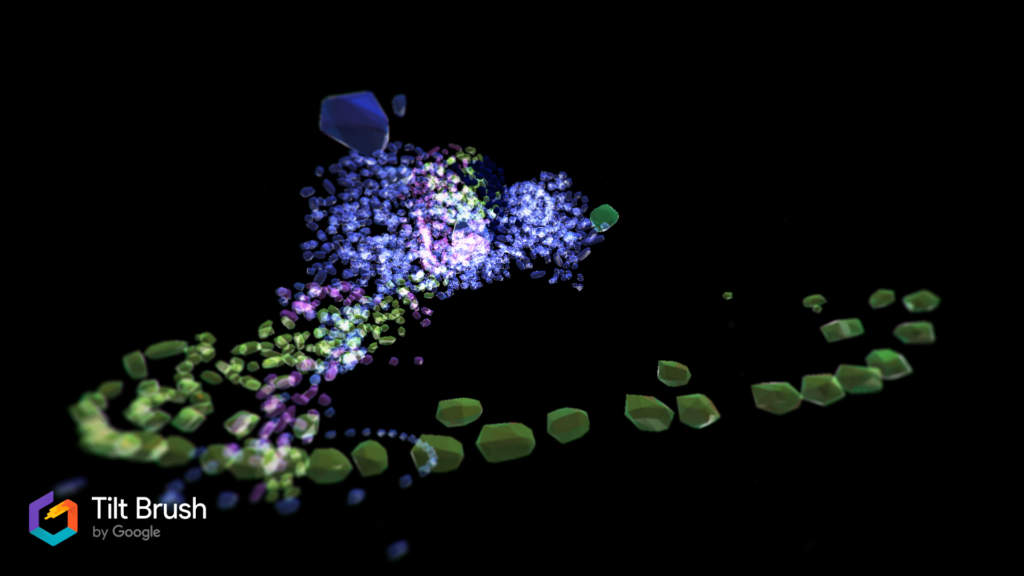

moonshot: solos for your home is an Augmented Reality (AR) app that places a more-than-human entity (a cyborg body) in the user’s home. The concept models for the piece were created through embodied practice in Tilt Brush, using transparent prisms to build the 3D model of moonshot’s posthuman representation.

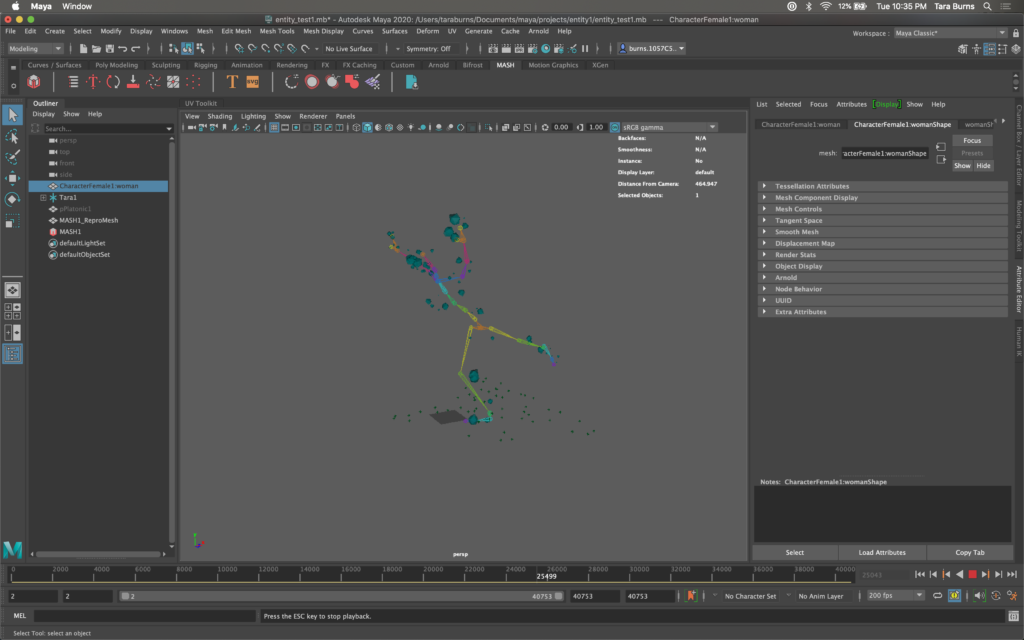

The process involved choreographing and performing a solo in a motion capture session, connecting that movement to a fluctuating polygon 3D model in Maya, and then bringing it into Unity to make it function on a device.

Transferring the concept model from Tilt Brush to Maya had some drawbacks. The transfer is possible, but the poly count of the Tilt Brush model was too high: rendering it and keeping the application running smoothly would have required too much processing power. I am still interested in pursuing this approach and believe it can work if the Tilt Brush model is smaller and has fewer elements. Once the Tilt Brush model is converted to an FBX file and imported into Maya, I would “paint” the model to assign the area of the skin to be activated by each portion of the model: https://youtu.be/_m5M0SD1BBk. However, for this version, with advice from Vita Berezina-Blackburn, I developed an alternate method of creating a 3D model with similar visuals using MASH networks in Maya.
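To make the poly-count problem concrete, the sketch below counts how many triangles a mesh will actually render and compares that to a budget. It is a minimal illustration, not part of the moonshot pipeline: it assumes faces are given as lists of vertex indices, and `TRIANGLE_BUDGET` is a hypothetical number (real limits depend on the device and the rest of the scene). Each n-sided face is drawn as n - 2 triangles, so a dense Tilt Brush painting made of many strokes adds up quickly.

```python
from typing import Sequence

# Hypothetical triangle budget for one model in a mobile AR scene.
# This is an assumption for illustration, not a figure from the project.
TRIANGLE_BUDGET = 100_000


def triangle_count(faces: Sequence[Sequence[int]]) -> int:
    """Count triangles after fan-triangulating each face (an n-gon yields n - 2)."""
    total = 0
    for face in faces:
        if len(face) < 3:
            raise ValueError(f"degenerate face with {len(face)} vertices")
        total += len(face) - 2
    return total


def fits_budget(faces: Sequence[Sequence[int]], budget: int = TRIANGLE_BUDGET) -> bool:
    """Return True if the mesh's triangle count is within the budget."""
    return triangle_count(faces) <= budget


if __name__ == "__main__":
    # A cube made of six quads: 6 * (4 - 2) = 12 triangles.
    cube = [[0, 1, 2, 3], [4, 5, 6, 7], [0, 1, 5, 4],
            [2, 3, 7, 6], [1, 2, 6, 5], [0, 3, 7, 4]]
    print(triangle_count(cube))  # 12
    print(fits_budget(cube))     # True
```

Reducing the number of strokes or elements in the source painting lowers this count directly, which is why a smaller Tilt Brush model may make the original approach workable.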

To create the AR app from motion capture data, I did the following:

- Imagined a 3D model in Tilt Brush

- Exported it to Sketchfab

- Converted the glTF file to FBX (using Blender, courtesy of Shadrick Addy)

- Imported the FBX file into Maya

- Choreographed and performed a solo, as well as an improvisation based on a poem, in a motion capture session

- Retrieved the motion capture data (courtesy of Vita Berezina-Blackburn)

- Used MASH in Maya to create animated 3D polygons that shift and change with the movement in the motion capture data:

- When I was recreating the idea of the Tilt Brush painting in Maya, Vita brought this tutorial to my attention, and I used it to create an alternate MASH version of my Tilt Brush 3D model: https://www.youtube.com/watch?v=JN-56EBz5q

- MASH seems to require attaching the mesh to a skin rather than just a data skeleton, so I opened a prefab biped skin (downloaded from Mixamo) and my motion capture data in Maya

- Throughout the process, these tool definitions from Autodesk’s Maya documentation were helpful: https://knowledge.autodesk.com/support/maya/learn-explore/caas/CloudHelp/cloudhelp/2018/ENU/Maya-MotionGraphics/files/GUID-26DAFCA0-C713-4E50-8736-44A803EE87B3-htm.html

- I used this tutorial to skin my data quickly: https://www.youtube.com/watch?v=LaO3wNXADw8

- I did not clean the motion capture data at this stage; if I do so later, I will use MotionBuilder.

- To export the animation:

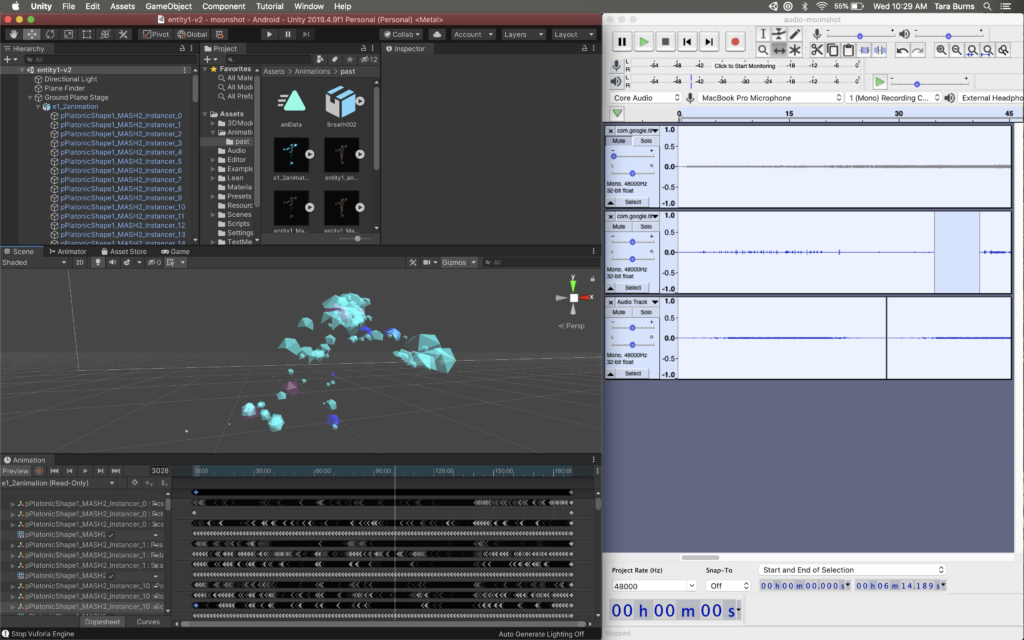

- You have to BAKE the MASH: https://forums.autodesk.com/t5/maya-forum/is-it-possible-to-bake-mash-mesh-animation-to-an-fbx-export-maya/td-p/6750329

- AND highlight the animation keyframes: https://www.google.com/search?client=firefox-b-1-d&q=export+to+unity+model+with+motion+data+from+maya#kpvalbx=_7sktYIGoA9CPtAaN96yYAw30

- The link above also helps with importing the animation into Unity and attaching it to the model, if needed
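As a rough mental model of the baking step: baking samples a procedurally driven animation at every frame and stores the results as ordinary keyframes, a form that an FBX file and Unity can carry, unlike Maya’s procedural MASH node network. The sketch below illustrates only that general idea in plain Python; it does not use Maya’s API, and `procedural_offset` is a hypothetical stand-in for whatever value a MASH network computes per frame.

```python
import math
from typing import Callable, Dict


def bake(evaluate: Callable[[int], float], start: int, end: int,
         step: int = 1) -> Dict[int, float]:
    """Sample evaluate(frame) for each frame in [start, end] and store it as a keyframe."""
    if step <= 0:
        raise ValueError("step must be positive")
    return {frame: evaluate(frame) for frame in range(start, end + 1, step)}


def procedural_offset(frame: int) -> float:
    """Hypothetical stand-in for a MASH-driven value, e.g. one polygon's offset."""
    return math.sin(frame * 0.1)


if __name__ == "__main__":
    keys = bake(procedural_offset, 1, 24)
    print(len(keys))  # 24 keyframes, one per frame
```

Once baked, the keyframes no longer depend on the procedure that produced them, which is what lets the animation survive export.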

Further work in Unity included creating an interface and instructions for use, using LeanTouch to scale and zoom the entity on a touch screen, and connecting the soundtrack I created from distortions of the Tilt Brush brushes.
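LeanTouch handles pinch gestures inside Unity, but the underlying idea fits in a few lines. The sketch below is a framework-independent illustration, not LeanTouch’s API: on each frame, the entity’s scale is multiplied by the ratio of the current to the previous distance between two fingers, then clamped to hypothetical `MIN_SCALE` and `MAX_SCALE` limits.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

# Hypothetical limits so the entity can neither vanish nor grow without bound.
MIN_SCALE = 0.1
MAX_SCALE = 10.0


def pinch_ratio(prev_a: Point, prev_b: Point, cur_a: Point, cur_b: Point) -> float:
    """Ratio of current to previous finger separation (>1 spreading, <1 pinching)."""
    prev_dist = math.dist(prev_a, prev_b)
    cur_dist = math.dist(cur_a, cur_b)
    if prev_dist == 0:
        return 1.0  # fingers started at the same point; leave the scale unchanged
    return cur_dist / prev_dist


def apply_pinch(scale: float, prev_a: Point, prev_b: Point,
                cur_a: Point, cur_b: Point) -> float:
    """Return the new uniform scale after one frame of a two-finger pinch."""
    new_scale = scale * pinch_ratio(prev_a, prev_b, cur_a, cur_b)
    return max(MIN_SCALE, min(MAX_SCALE, new_scale))


if __name__ == "__main__":
    # Fingers move from 100 px apart to 200 px apart: the entity doubles in size.
    print(apply_pinch(1.0, (0, 0), (100, 0), (0, 0), (200, 0)))  # 2.0
```

Using a ratio rather than an absolute distance keeps the gesture feeling the same regardless of screen resolution or how far apart the fingers start.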

This application places the 3D model and its connected motion capture solo in the user’s space. By encouraging the user to interact with the entity, I am exploring audience as performer and archive as performance. The audience becomes the performer by engaging with the AR entity in their space: watching from a distance, moving 360º around it, or sitting immersed in the body of the soloist. The dancer’s choreography and movement were archived through motion capture. By placing this danced archive in the hands of the user, I am questioning the essence of performance and exploring alternative performance venues.

Moving forward, the app will be expanded to house multiple solos. Dancer-choreographers Kylee Smith, Yukina Sato, Menghang Wu, and Jacqueline Courchene are currently recording their choreography in motion capture. I will collaborate with each of them to continue this investigation of artifacts as performance and of an application as a performance platform, bringing in new bodies and perspectives.